Multimodal Conversation Analysis with Experts: SSP Project

Core Collaborators: Kiyosu Maeda (Princeton University), Hiromu Yakura (Max Planck Institute for Human Development)

Period: 2018.4 - Current

Background and Goal

Face-to-face human communication is a complex process involving both verbal and nonverbal behaviors, such as gestures, facial expressions, and body language. Beginning with Charles Darwin, researchers have recognized these behaviors as spontaneous and unregulated expressions of internal states. Today, experts analyze these signals for applications in coaching, counseling, and human resource interviews. However, mastering these skills requires years of experience and often relies on subjective judgment. In this Social Signal Processing (SSP) project, we explore whether computers can interpret the complex dynamics of human communication and support experts through effective human-AI collaboration.

Journey

We examined the role of AI in self-reflection and leadership development. [C.19] Coaching Copilot evaluated the effectiveness of state-of-the-art LLM-based chatbots in supporting leadership growth. While these AI systems can facilitate structured self-reflection, our findings emphasize that they should complement rather than replace human coaches. Human coaches offer unique advantages, such as interpreting subtle behavioral cues and providing context-aware interventions, reinforcing the importance of face-to-face coaching.

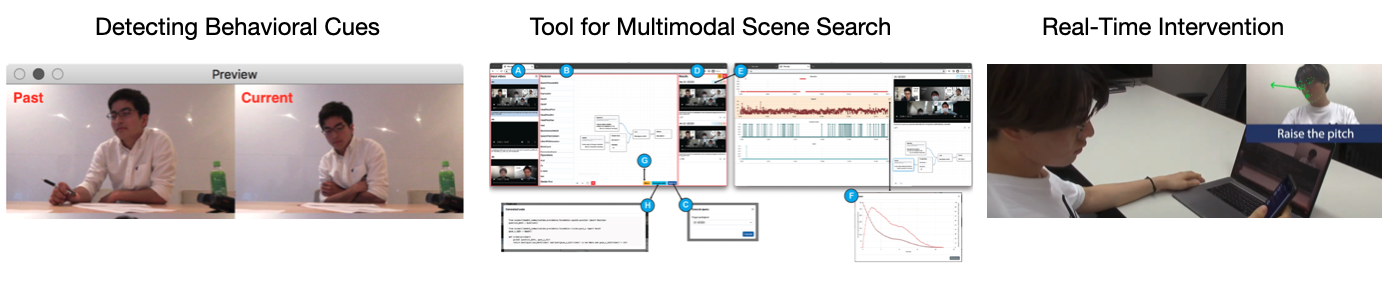

[C.1] REsCUE and [C.2] INWARD were our first attempts at integrating multimodal behavioral sensing to augment such professional dialogue. These projects developed tools for executive coaching, leveraging multimodal anomaly detection and video reflection to enhance both efficiency and effectiveness. The approach successfully combined human and computational intelligence, aiding human coaches in decision-making. Its efficacy was further validated in other domains, such as human assessment, as demonstrated in our case study paper [W.7] AI for Human Assessment.

Building on these findings, we recognized the need to enhance the objectivity of multimodal analysis by making experts' implicit knowledge explicit and programmable. This led to [J.8] ConverSearch, a multimodal scene search tool that employs a visual programming paradigm to encode human knowledge for reuse. By structuring expertise in a programmable manner, this work laid the foundation for more systematic and scalable human-AI collaboration.

With multimodal analysis providing a strong foundation, we then explored how AI-mediated interventions could support real-time communication. [C.8] Mindless Attractor introduced an auditory perturbation technique to subtly draw attention in communication settings. In this way, we continue to advance the fields of SSP and HCI by exploring the effective use of multimodal behavioral analysis across diverse dialogue contexts.

Impact

Our inventions have been licensed to several companies in domains including coaching, assessment, education, and sales. For instance, our multimodal behavior analysis technology has been deployed in a SaaS product, ACES Meet, and had analyzed more than 1 million meetings as of October 2024.

Acknowledgments

We sincerely thank our industrial collaborators for providing us with invaluable research opportunities.

Links

Research Publications

- [W.9] AI for Meeting Minutes: Promises and Challenges in Designing Human-AI Collaboration on a Production SaaS Platform, CHI2025 case study

- [J.8] ConverSearch: Supporting Experts in Human Behavior Analysis of Conversational Videos with a Multimodal Scene Search Tool, TiiS2024

- [C.19] Coaching Copilot: Blended Form of an LLM-Powered Chatbot and a Human Coach to Effectively Support Self-Reflection for Leadership Growth, CUI2024

- [W.7] AI for human assessment: What do professional assessors need?, CHI2023 case study 🥇

- [C.8] Mindless Attractor: A False-Positive Resistant Intervention for Drawing Attention Using Auditory Perturbation, CHI2021 🥇

- [C.2] INWARD: A Computer-Supported Tool for Video-Reflection Improves Efficiency and Effectiveness in Executive Coaching, CHI2020

- [C.1] REsCUE: A framework for REal-time feedback on behavioral CUEs using multimodal anomaly detection, CHI2019