Context-Aware, Mixed-Initiative Support for Procedural Tasks: PrISM Project

Advisors: Mayank Goel (CMU), Jill Fain Lehman (CMU), Bryan T. Carroll (University Hospitals of Cleveland Department of Dermatology)

Core Collaborators: Vimal Mollyn (CMU), Prasoon Patidar (CMU), Shreya Bali (CMU), Hiromu Yakura (Max Planck Institute for Human Development)

Period: 2021.9 - Current

Background and Goal

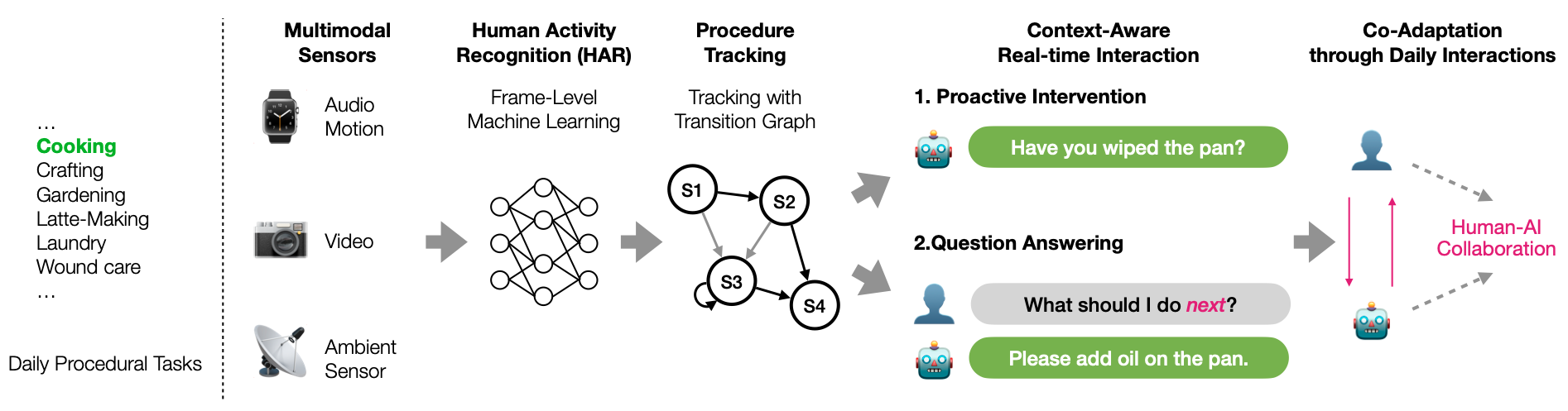

Every day, we perform many tasks that involve a series of discrete steps, including cooking, DIY, and medical self-care (such as using a COVID-19 self-test kit). Executing every step correctly can be difficult. When we try a new recipe, for example, we might have questions at any step, and we might make mistakes by skipping important steps or doing them in the wrong order. This project, Procedural Interaction from Sensing Module (PrISM), aims to support users in executing these kinds of tasks through dialogue-based interactions. Using multimodal sensors such as a camera, wearable devices like a smartwatch, and privacy-preserving ambient sensors such as Doppler radar, an assistant can infer the user's context and provide contextually situated help. From an HCI perspective, we explore how effective real-time human-AI collaboration is at fostering user independence and facilitating skill acquisition.

Approach

Our framework is designed to be modular and flexible, so we can integrate different sensors and interactions to suit each situation. The underlying technology is multimodal Human Activity Recognition (HAR) combined with step tracking ([J.4] PrISM-Tracker), which lets the assistant infer which step of the task the user is currently performing. The framework can work with a variety of sensors; currently, we mainly use a smartwatch because it is ubiquitous, raises fewer privacy concerns than camera-based systems, and can monitor a user across a wide range of daily activities.
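To illustrate how HAR output and knowledge of the procedure's order can be combined into a step estimate, here is a minimal sketch using standard Viterbi decoding over a hidden Markov model. It assumes a hypothetical setup in which a HAR model emits, for every frame, a likelihood for each step, and the procedure's allowed step transitions are given as a matrix. The function `track_steps` and the toy numbers are illustrative assumptions, not the PrISM-Tracker implementation.

```python
import math

def _log(p):
    # log(0) -> -inf, so impossible transitions are never selected
    return math.log(p) if p > 0 else float("-inf")

def track_steps(frame_probs, transition, initial):
    """Viterbi decoding: most likely step index for each HAR frame.

    frame_probs[t][s]: how well the HAR output at frame t matches step s
    transition[i][j]:  P(step j at t+1 | step i at t), encodes procedure order
    initial[s]:        P(step s at the first frame)
    """
    n = len(initial)
    score = [_log(initial[s]) + _log(frame_probs[0][s]) for s in range(n)]
    backptrs = []
    for t in range(1, len(frame_probs)):
        new_score, ptr = [], []
        for j in range(n):
            # Best previous step to have come from, given the transition graph
            best_i = max(range(n), key=lambda i: score[i] + _log(transition[i][j]))
            new_score.append(score[best_i] + _log(transition[best_i][j])
                             + _log(frame_probs[t][j]))
            ptr.append(best_i)
        score = new_score
        backptrs.append(ptr)
    # Trace back from the most likely final step
    path = [max(range(n), key=lambda s: score[s])]
    for ptr in reversed(backptrs):
        path.append(ptr[path[-1]])
    return path[::-1]

# Toy 3-step procedure: at each frame the user either stays on a step or advances.
initial = [1.0, 0.0, 0.0]
transition = [[0.7, 0.3, 0.0],
              [0.0, 0.7, 0.3],
              [0.0, 0.0, 1.0]]
frame_probs = [[0.8, 0.1, 0.1],
               [0.6, 0.3, 0.1],
               [0.2, 0.7, 0.1],
               [0.1, 0.8, 0.1],
               [0.1, 0.2, 0.7]]
print(track_steps(frame_probs, transition, initial))  # [0, 0, 1, 1, 2]
```

The transition matrix is what keeps noisy frame-level predictions from jumping backward or skipping ahead arbitrarily: a frame that looks slightly more like step 2 cannot be assigned to it unless the path through earlier steps supports it.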

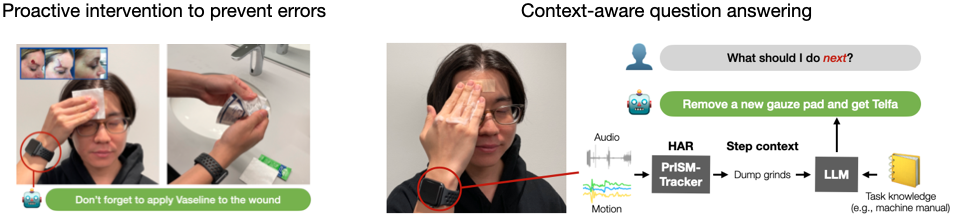

The assistant then offers two types of interaction: question answering ([J.7] PrISM-Q&A) and proactive intervention ([C.20] PrISM-Observer). In Q&A, the assistant answers the user's questions (e.g., "What should I do next?"), complementing a Large Language Model (LLM) with the tracked step context to generate more accurate responses. In Observer, the assistant probabilistically models and forecasts user behavior from HAR, and proactively intervenes when it detects that the user is about to make a mistake (e.g., "Have you wiped the pan?").
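The two interaction types can be sketched as follows, under simplifying assumptions. For Q&A, the tracked step is added to the prompt sent to an LLM (the prompt format is a hypothetical example; no LLM call is made here). For proactive intervention, the sketch assumes the tracker provides a probability distribution over the current step and that a transition matrix includes "skip" edges; the assistant speaks up when the forecast probability of jumping past a critical step exceeds a threshold. All names (`build_step_aware_prompt`, `skip_probability`, `should_intervene`) and the 0.3 threshold are illustrative assumptions, not the PrISM-Q&A or PrISM-Observer implementations.

```python
def build_step_aware_prompt(question, steps, current_step):
    """Attach tracked step context to a user question before it goes to an LLM."""
    completed = ", ".join(steps[:current_step]) or "none"
    return (
        f"Task steps: {' -> '.join(steps)}\n"
        f"Completed so far: {completed}\n"
        f"Current step: {steps[current_step]}\n"
        f"User question: {question}"
    )

def skip_probability(step_probs, transition, critical):
    """P(the next transition jumps from a step before `critical` to one after it)."""
    n = len(step_probs)
    return sum(step_probs[i] * transition[i][j]
               for i in range(critical)            # user is still before the critical step
               for j in range(critical + 1, n))    # ...and moves directly past it

def should_intervene(step_probs, transition, critical, threshold=0.3):
    return skip_probability(step_probs, transition, critical) > threshold

steps = ["take out pan", "wipe pan", "heat pan", "add oil"]
transition = [[0.5, 0.1, 0.4, 0.0],   # from "take out pan", users often jump to "heat pan"
              [0.0, 0.7, 0.3, 0.0],
              [0.0, 0.0, 0.6, 0.4],
              [0.0, 0.0, 0.0, 1.0]]
belief = [0.9, 0.1, 0.0, 0.0]          # tracker's current belief over steps

print(build_step_aware_prompt("What should I do next?", steps, current_step=0))
if should_intervene(belief, transition, critical=steps.index("wipe pan")):
    print("Have you wiped the pan?")   # skip probability here is 0.9 * 0.4 = 0.36
```

Forecasting with a probability rather than waiting for a hard step decision lets the assistant act before the mistake happens, at the cost of occasional false alarms, which the threshold trades off.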

Finally, we enable the assistant to use user-assistant dialogue as feedback to improve its support strategies ([C.22] Scaling PrISM). Mackay introduced the idea of a human-computer partnership, in which humans and intelligent agents collaborate to outperform either working alone. Inspired by this idea, we proposed ways for our assistant to adapt dynamically through interactions after deployment. This mixed-initiative design helps the assistant improve its context understanding and find a comfortable balance of control with the user, a balance that itself shifts over time.
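As one hypothetical way an assistant could turn dialogue into feedback, the sketch below keeps a per-step intervention threshold and nudges it after each reminder: a reminder the user found helpful lowers the threshold (the assistant takes more initiative on that step), while a false alarm such as "I already did that" raises it (the assistant defers more to the user). The class name, learning rate, and bounds are all illustrative assumptions and are not the adaptation method described in Scaling PrISM.

```python
class AdaptiveInterventionPolicy:
    """Hypothetical per-step intervention thresholds tuned by user feedback."""

    def __init__(self, n_steps, threshold=0.3, rate=0.05, lo=0.05, hi=0.95):
        self.thresholds = [threshold] * n_steps
        self.rate, self.lo, self.hi = rate, lo, hi

    def should_intervene(self, step, risk):
        # risk: e.g., a forecast probability that the user skips `step`
        return risk > self.thresholds[step]

    def record_feedback(self, step, helpful):
        # Helpful reminder -> lower threshold (intervene earlier next time).
        # False alarm      -> raise threshold (intervene less often).
        delta = -self.rate if helpful else self.rate
        self.thresholds[step] = min(self.hi, max(self.lo, self.thresholds[step] + delta))

policy = AdaptiveInterventionPolicy(n_steps=4)
print(policy.should_intervene(1, risk=0.32))   # True with the default threshold of 0.3
policy.record_feedback(1, helpful=False)       # user: "I already wiped it"
print(policy.should_intervene(1, risk=0.32))   # False: the step-1 threshold is now about 0.35
```

Keeping thresholds per step, rather than one global value, lets the balance of control differ across parts of a task: a user may want reminders on a rarely performed step but not on one they have mastered.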

Impact

We are collaborating with medical professionals to apply our PrISM assistant in the medical context. One project is to support post-operative skin-cancer patients in their wound care, where the assistant helps them follow the prescribed wound care procedure for at-home recovery. Another project is to support patients with dementia in their daily routines, such as kitchen activities. Check out our Autonomous Project.

📣 We are excited to apply our assistant to more settings. Let us know if you are interested in collaborating with us!

Acknowledgments

This project is supported in part by the NSF (No. 2406099), the Center for Machine Learning and Health (CMLH), and the Masason Foundation. The development of the PrISM assistant for health applications is a collaboration with the University Hospitals of Cleveland Department of Dermatology and Fraunhofer Portugal AICOS.

Links

Research Publications

- [C.22] Scaling Context-Aware Task Assistants that Learn from Demonstration and Adapt through Mixed-Initiative Dialogue, UIST 2025

- [J.7] PrISM-Q&A: Step-Aware Voice Assistant on a Smartwatch Enabled by Multimodal Procedure Tracking and Large Language Models, IMWUT 2024

- [C.20] PrISM-Observer: Intervention Agent to Help Users Perform Everyday Procedures Sensed using a Smartwatch, UIST 2024

- [J.4] PrISM-Tracker: A Framework for Multimodal Procedure Tracking Using Wearable Sensors and State Transition Information with User-Driven Handling of Errors and Uncertainty, IMWUT 2022